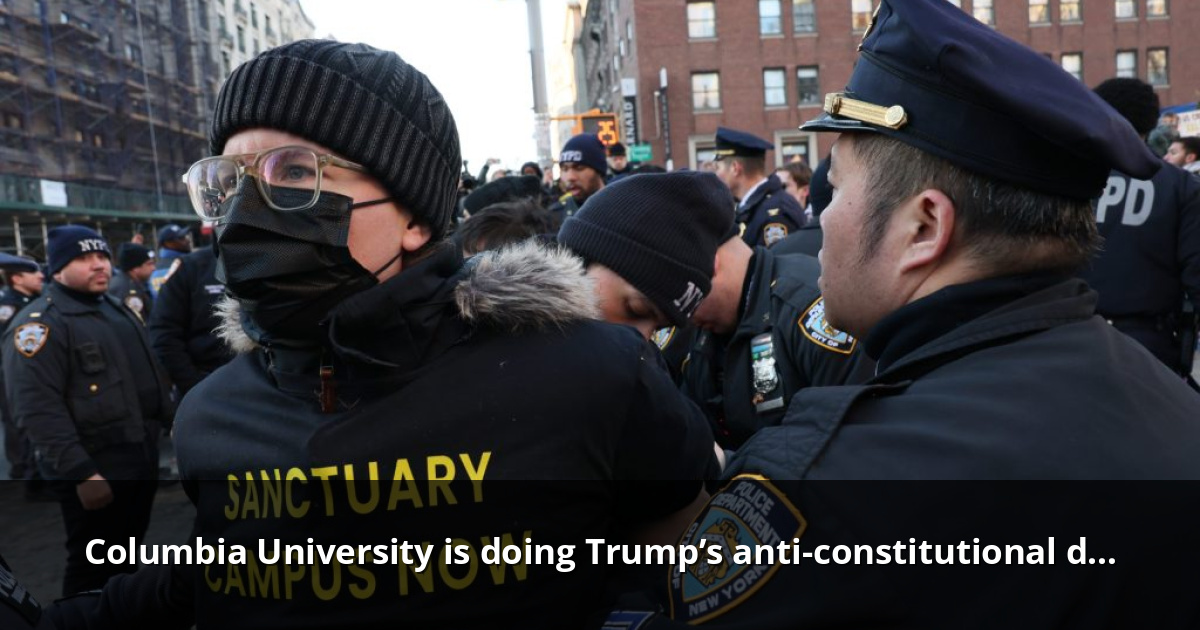

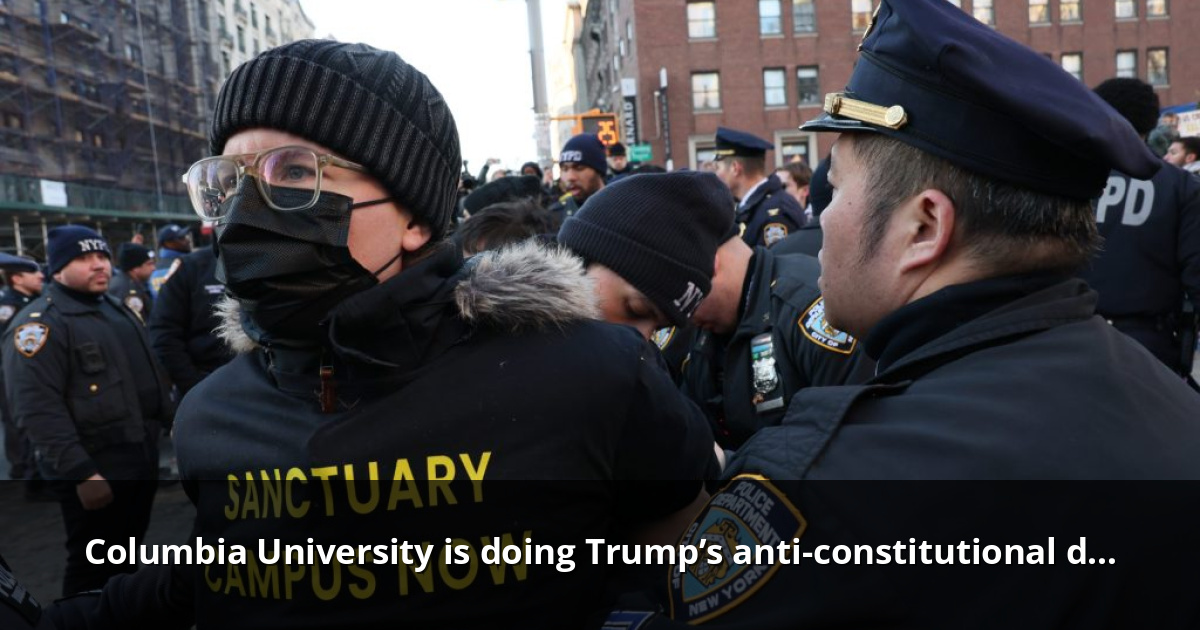

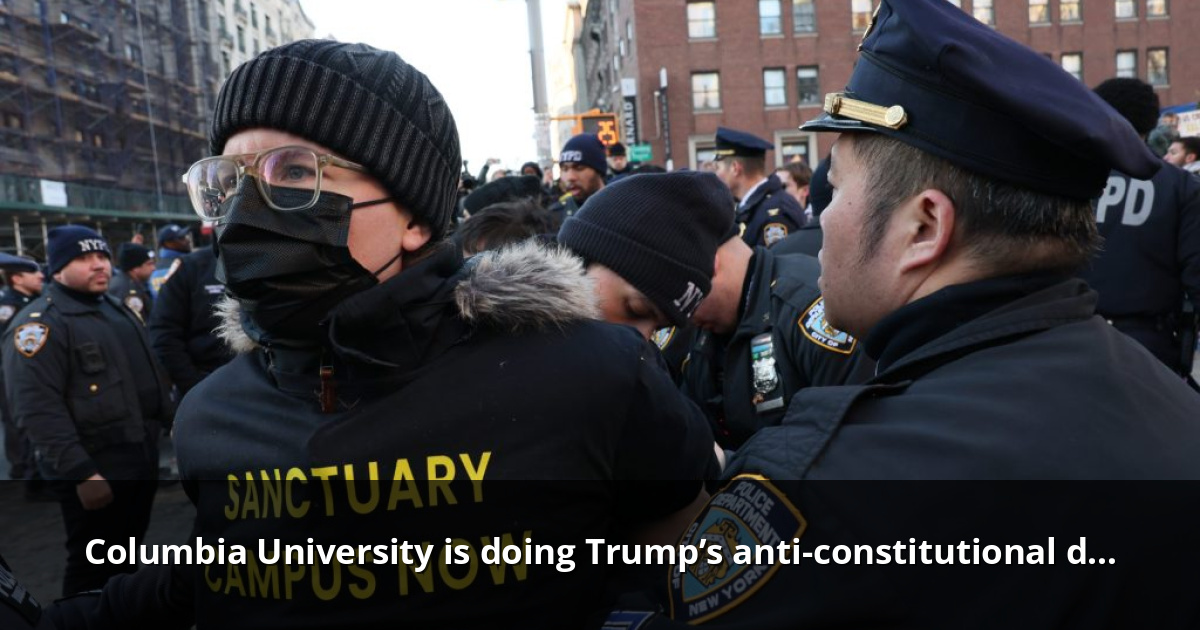

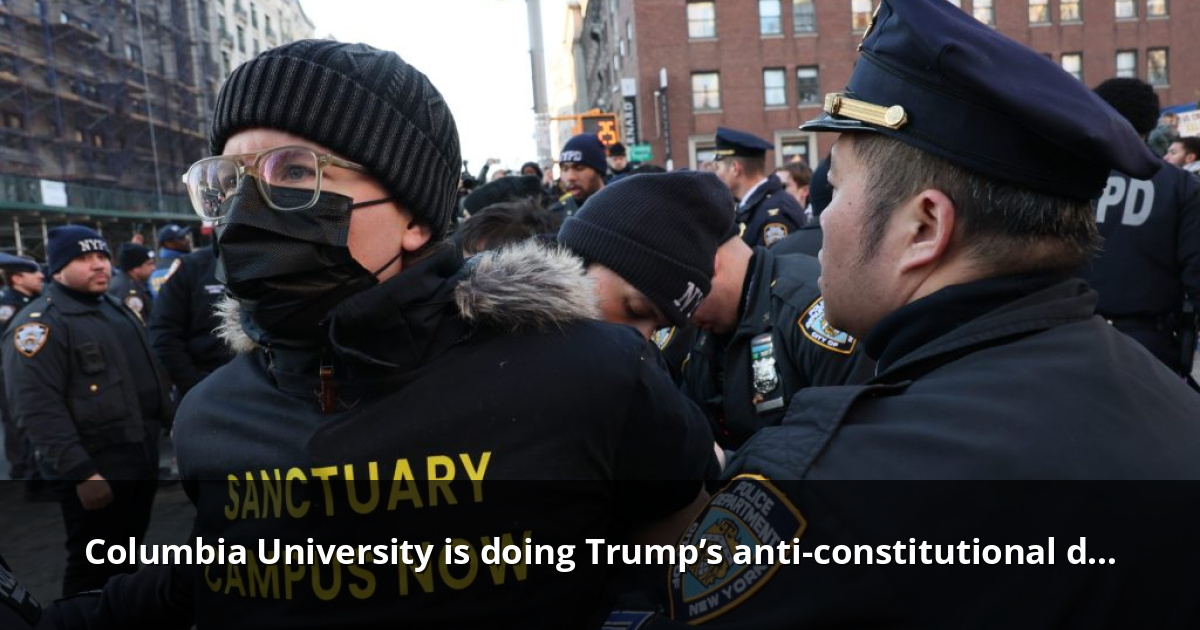

Once-reputable institutions like Columbia University have willingly acted as third-party partners in the assault on our Constitutional rights by yielding to economic coercion and social pressure from the Trump administration. In this episode of The Marc Steiner Show, Marc speaks with Amy E. Greer and Zal K. Shroff, two members of former Columbia graduate student Mahmoud Khalil’s legal team, about how the oppression of Khalil and other Palestine solidarity protestors is reshaping the future of free speech in America.

Guests:

Amy E. Greer is an associate attorney at Dratel & Lewis, and a member of Mahmoud Khalil’s legal team. Greer is a lawyer and archivist by training, and an advocate and storyteller by nature. As an attorney at Dratel & Lewis, she works on a variety of cases, including international extradition, RICO, terrorism, and drug trafficking. She previously served as an assistant public defender on a remote island in Alaska, defending people charged with misdemeanors, and as a research and writing attorney on capital habeas cases with clients who have been sentenced to death.

Zal K. Shroff is an assistant professor at CUNY School of Law and director of the Equality & Justice In-House & Practice Clinic. Shroff is a civil rights lawyer and has been a lead attorney in more than two dozen impact cases across the United States spanning police and prosecutorial accountability, voting rights, First Amendment protest/political speech, race and religious discrimination, conditions of confinement, and poverty discrimination.

Additional links/info:

Marc Steiner, The Marc Steiner Show / TRNN, “Trump’s government hasn’t won its case against Mahmoud Khalil-yet”

Maximillian Alvarez, TRNN, “‘Call Amy!’ Mahmoud Khalil’s lawyer reveals how he won his freedom”

Credits:

Producer: Rosette Sewali

Studio Production: David Hebden

Audio Post-Production: Stephen Frank

Transcript

The following is a rushed transcript that might contain errors. A proofread version will be made available as soon as possible.

Marc Steiner:

Welcome to The Marc Steiner Show here on The Real News. I’m Marc Steiner. It’s wonderful to have everyone with us. We’ve been standing with and following the ordeal of Mahmoud Khalil since the storm troopers of ICE dragged him from his home on March the 8th. No warrant, nothing, just an order from the upper echelons of our government to detain Mahmoud, who was lawfully in our country and a student at Columbia University. His crime, in our opinion? He stood up for the Palestinian people. We continue to follow this case and the bigger concerns it raises for the future of our society. So today we’re once again joined by Amy E. Greer, associate attorney at Dratel & Lewis and a lead member of Mahmoud Khalil’s team, and another lead member of Mahmoud’s legal team, Zal K. Shroff, assistant professor of law and director of the Equality and Justice Clinic at CUNY Law School in New York City. You can read more about both of them on our website.

So it’s nice to see you again, Amy, and to meet you, Zal. Good to have you both on. I appreciate you doing this this morning.

Zal K. Shroff:

Absolutely.

Marc Steiner:

So let’s get started and briefly discuss how Mahmoud is doing and what his current state is.

Amy E. Greer:

Yeah, sure. I mean, we are both working with Mahmoud in different capacities, but I think Mahmoud is doing as well as one can with an anvil hanging over his head at the moment and the machinery of the administration aiming at him. But he remains steadfast in his commitment to Palestinian rights and dignity and steadfast in his commitment to his family’s safety and wellbeing. So I believe those things are what are assisting him in this regard by grounding him and keeping him focused.

Marc Steiner:

Zal, do you want to add anything to that?

Zal K. Shroff:

Despite his own risks and exposure, I think what’s really remarkable is how Mahmoud manages to concentrate on the larger picture of American democracy and what is failing. And I think that’s what’s really remarkable. He’s just one of the eight students that we represent in this case, but the only one who is not proceeding anonymously because he’s already been the subject of so much government scrutiny. And it’s remarkable that he’s still able to carry this banner and discuss the importance of free speech in our democracy today.

Marc Steiner:

Let’s talk about all that for a moment. I believe it’s really important to talk about what is actually happening here, why Mahmoud and the others are being imprisoned and prosecuted, and what the justification is for this assault on their freedom for doing what is permitted in America to do.

Zal K. Shroff:

Yes, I’m happy to start there. Okay, good. What has actually been happening here has been a significant distraction, in my opinion, because the Trump administration seeks to exert coercive control over our private institutions using all available means. And so they’re using anti-discrimination law in this really ugly and toxic way to actually target the communities that those laws were meant to protect. So we now have Title IX, which is meant to protect against gender discrimination. They’re using that to target universities, to target trans people under the guise of protecting women. They’re doing the same thing with Title VI, which is a race discrimination statute. It was intended to shield people from racial discrimination, but it is actually being used to subvert the Palestinian solidarity movement under the pretext of protecting Jewish students. All the while, Jewish students are actually being targeted just as much as anyone else if they’re expressing views that are critical of Israel.

And the same playbook applies to the contract cancellations with law firms and grant cancellations. Every financial and coercive tool the federal government has to bring to bear, it has brought to bear on our private institutions, and what we’re seeing now is the test of the mettle of these organizations, of whether they’re actually caving to that pressure or they’re fighting back. I believe that many more organizations than we would like to see are caving. Columbia University is one of those, but across sectors there are so many of those important, really large institutions we would actually depend upon to represent us, to fight for us, to fight for their constituencies. Instead, what we’re seeing is a pre-compliance regime under authoritarian rule, which will ultimately undermine our democracy.

Amy E. Greer:

Palestine, in this country, has always been the canary in the coal mine, the issue that kind of challenges how comfortable we are in this country with incursions into our rights. Therefore, anyone who speaks out for Palestine, for Palestinian rights and dignity, is also being used to advance the violations of the First Amendment, which is another aspect and angle of this: incursions into our institutions, like Zal just went through, and also the rolling back of laws that are going to impact, for example, all people engaged in immigration proceedings, rolling back the rights of all people who face removal proceedings in this country and also rolling back First Amendment rights of people who are not citizens in this country. And Palestine has frequently been used because people don’t use their political, financial, social, or academic freedom capital to defend Palestinians or those who speak out against Israel. And therefore it moves the goal line for all people, while many of us remain silent in the face of these incursions into our institutions, into our rights, into our homes and into our communities. So that’s the other facet of this that I think is really critical about what our students are speaking about and what they have been sounding the alarm about since 2023.

Marc Steiner:

And it seems to me that one of the things I was thinking about last night and this morning about this case and what’s happening to Mahmoud was that it’s not only about Mahmoud, but also that it’s about how things are intertwined here. I mean, it’s the rise of authoritarianism coming out of the right-wing movement that’s in control of the government of the United States. It silences protests that have always been at the heart of America. I mean, I was at Columbia decades back with Mark Rudd and the others when all that happened. Columbia is once again the center of that. And I believe the significance of what this moment actually means might get lost. It’s about Mahmoud’s freedom and his right to be who he is, and the others who are also being investigated and prosecuted. Beyond that, though, this is a fundamental case, it seems to me, about the future of our freedoms in this country. I think that’s something that people miss in this. It’s not just a case involving a student.

Zal K. Shroff:

That’s absolutely right. Additionally, it’s a case that concerns our fundamental constitutional rights. So I think we’re seeing across the board First Amendment violations from this administration, suppressions of the right to speech, suppression of dissent. We’re also seeing massive amounts of excessive force in the context of ICE, et cetera. And so, I believe the situation we’re currently at is a test of whether or not the courts will punish those who commit obvious human rights violations and hold the administration accountable. And what we can see is that the institutions that are fighting back, they are winning. So, for example, large law firms like Jenner & Block fought the administration, arguing that you can’t cancel agreements and relationships because you dislike the clients we represent. Harvard says you can’t come after our funding just because you don’t like how we’ve handled protest on campus.

Same thing with the UCLA community. There are so many situations where these cases are moving forward, including a win in the AAUP v. Rubio case, which contends that it is unlawful to pursue removal proceedings against people based on their political expression. And so I think we actually are seeing a remarkable amount of movement from courageous people and institutions to fight back against this administration, to say and defend what the Constitution actually means. We need more of those institutions, and every one of those institutions is observing a decline in our constitutional rights. And I think that’s part of the movement that we’re in and part of the moment that we’re in. How do we get more constitutional defenders, more attorneys, more courageous plaintiffs in the mix to fight this administration, with the belief that that will actually result in a healthier and stronger democracy in the now?

Marc Steiner:

Do you want to comment on that as well?

Amy E. Greer:

I mean, I don’t have anything to add to that amazing statement. I just want to underscore the point, and I want to point out that this nation is well aware of the negative effects of effective advocacy. So we saw, following the murder of George Floyd, the Dakota Access Pipeline, Occupy Wall Street, and then going all the way back to civil rights and long before that the black communist movement in the deep south, et cetera, that the government responds to these effective tools of organizing and speaking of a new type of freedom where all people flourish and all creatures flourish and our earth flourishes, et cetera, that there have always been efforts to criminalize those types of protests or those types of actions. And I would just remind listeners that when people take action that we wouldn’t take ourselves or that we may disagree with, the answer to that is not criminalizing it. The answer to that isn’t removing those individuals from this nation.

The answer to that is not suppressing violently in the ways that Zal described or making incursions into our institutions. Our job as citizens, and I use that word broadly, is to listen to each other and engage, not to support efforts to demonize and target people. We’ve always seen these backlashes and I think what we’re seeing now is the veil is down. There’s no mystery here about what voices are being suppressed and whose bodies are being harmed. And hopefully with that clarity, people will be able to comprehend why these people and these voices are being silenced in such violent ways, both physically and politically.

Marc Steiner:

This is one of those cases that, it seems to me, can set a very dangerous precedent for America if they’re allowed to prosecute Mahmoud, push him out of the country, go after the others, take away funding from places like Columbia and other universities. As I said to a friend the other day, it reminds me of the movement of the late 1960s, but it’s on steroids in terms of the consequences.

Zal K. Shroff:

I mean, I think that’s absolutely right. And it’s really remarkable that Columbia University as one of those supposed bastions of academic freedom is the institution that is caving here. And I believe what we’re seeing is very challenging when an institution like that denies its connection to the administration and denies the pressure it is under by saying that it doesn’t exist. And then saying that its students aren’t at risk when in fact dozens of students have already been suspended, expelled, had their information shared with Congress in a public way and then disclosed in a public way. The dangers are real for the students. The risks to the institution are real, and yet the people at the helm of the institution are complicit in its destruction. And I think that’s the core of the problem for this case, which is it’s very obvious when the federal government threatens constitutional rights, they are to be held to account for that and you can sue them for that.

The coercive tool that the federal government is using to coerce the private actors is the one that is really being tested, and that, in my opinion, is the key. And so can you actually go after these private actors for their complicity? That is supported by a legal framework, but we are in an unprecedented situation where we haven’t really had to put that to the test as hard as we do now. And so I think that’s where we are in the Columbia case, but also more broadly across the field, frankly, of federal government coercion: are the courts going to take this seriously, and are they going to use this state compulsion theory to acknowledge the economic reality that in fact, these are not independent actors anymore, they have been co-opted and they are causing constitutional harm? Because effectively, if the courts don’t do anything about that, we now have a system where the federal government will, by proxy, take away all of our rights through economic coercion of our private institutions. And that’s just not a world that we can live in. And it’s not the world in which we currently reside. That’s not what the civil rights framework says. That’s not one of the legal options that we have. But we do depend on the courts and on courageous plaintiffs to bring these cases to actually vindicate those rights against this sort of coercion of our private institutions.

Amy E. Greer:

And I think historians that we’ve spoken to in organizing this case and the theory in this case have really validated everything Zal just outlined, which is whenever this government has successfully made similar types of incursions into private spaces, it has always required the capitulation and/or cooperation of private actors, right? Thinking about the McCarthy era and the House Un-American Activities Committee, among other things. And something that I think gets lost in the narrative of that time is how McCarthy and that committee specifically, but other government actors too, would not have been able to do what they did at the scale they did without these third parties either being coerced into helping or recognizing this sort of subliminal coercion, if you will: if we’re not for this and people see us as opposed, we’ll also be hurt or lumped in with these groups of people who are being bullied and humiliated, unable to find employment, and essentially forced to leave the country in many cases.

And so this just highlights that this is a playbook we’ve seen before in this country, but the scale of it and the access to technology now as well, I think, should give us all pause. And so we are testing this theory because it should make us all uncomfortable to see the ways in which places like Columbia and the institutions Zal has already outlined, which we viewed as the first line of defense against these types of incursions, are actually either capitulating or cooperating or otherwise becoming that state actor in these types of erosions and incursions into our rights. Experts on HUAC have made it abundantly clear that without those private organizations, HUAC couldn’t have accomplished what it did. And I think that’s exactly what we’re seeing here.

Marc Steiner:

So what you two are saying is that this is one of the instances of our democracy suffocating right now. And I think the depth of that is not seen by many because people are not following Mahmoud’s case day in and day out. I mean, we’re covering it and we’re pushing it out there and we’re talking about it. Because we are in a very dangerous situation, it is important to discuss it. And I wonder, A, how you think this will turn out legally in terms of the work you’re doing, but B, what it would mean if they were allowed to do what they’re doing in terms of taking funding away from schools and throwing Mahmoud out of the country. Because it just opens the door to even more oppression in our country. When I say it’s beyond Mahmoud, that’s what I mean.

Amy E. Greer:

I think one of the keys here that is just really critical is that the legal system never has and never will save us. It is necessary because it is a crucial strategic tool, with the courts interpreting the law and the Constitution to be protective of all of our rights, to be protective of, as the Bible says, the least of these, the people who have been purposefully marginalized and otherized in society. The legal system has always had a significant influence on marginalizing people, as well as on upholding voting rights, religious freedom, and other issues. But it is but one strategy. We as lawyers are part of the broader struggle, but we are not the struggle.

And so I think Zal will bring us home on the other facets of your question, but speaking for myself as a lawyer, I’m very aware that I am only one piece of this very big pie that we are all in, and that it will take all of us holding power to account, speaking truth to each other, holding power to account together in this push and sort of protective fire line that we’re building. Courts shouldn’t be seen as the only option here because litigation is not the only option. That said, they’re a very critical mechanism. So with that, I’ll hand it off to Zal. Absolutely.

Zal K. Shroff:

Yeah. And to your first comment regarding under-reporting, I believe we are in a time when power has the ability to determine what the truth is. And so I view the legal process as a truth telling process of having a neutral arbiter to actually say what fact is. And I believe what we’re seeing is a massive media blitz from Columbia, very well-resourced institutions, and individuals who are in denial mode. And so the federal government says, oh, we would never coerce Columbia to do anything. They made all these choices by themselves. However, if you take a look at the information, Columbia received $400 million in funding that was pulled one week later. That sure looks like government coercion. Columbia also changes its definitions of speech that is against the law on campus. And then one week later they’ve got their funding restored in a contract with the federal government.

And the same thing from Columbia. They say, “Oh, why would our students be afraid of us? We would never target students for their political expression.” And yet reports show that in the same few weeks, they disciplined 80 students, sent them home, suspended them, or did something else obscene to their futures. And so I think that that denial and that publication of the nothing to see here is very difficult to counteract. And the legal process is one of the ways that we do that truth telling, but it also has teeth to it. The right to access courts has coercive power. And so what we’re seeking in this lawsuit is an end both to the financial pulls from the federal government, stopping them from using their purse strings as a way to control Columbia like a marionette, and then also stopping the individual disciplinary proceedings that all these students are experiencing that are clearly only there to target their political advocacy.

And so, in my opinion, the court process can accomplish both. It does the truth telling of what is actually happening on this campus from the experience of people who are going through it, and then also what is the remedy that we have a right to as the public to stop this from happening to us? Both are possible through this lawsuit. Nothing is possible without the courage and coordination of the people who are living this experience and engaging in this movement-building, as Amy said. But the court has a really critical role to play in the truth telling and the compulsion of the government back to what we need it to be. So I believe that’s where we are in the lawsuit and what we anticipate the results of this lawsuit to be. And I think when we are successful with that, if we are successful with that, we will actually see more cases like that across the country that are holding the administration to account, and we will see that fight for our democracy reinvigorated. I think that’s what we really need to see in this moment to give us all hope of what comes next.

Marc Steiner:

So I have to ask you, A, as we come to a close: How is Mahmoud doing in this conflict? Because he’s up against the wall with what they’re attacking him with. And B, as you cited earlier, people don’t realize that 80 other students are being affected. They are being forced to leave a university because their lives have been affected. Everything they put into it, those are factors on a personal and a political level that are really critical, and people need to understand what’s taking place here. This is reminiscent of what the McCarthyite era did in the fifties in destroying people’s lives. And that’s what we’re seeing right now.

Amy E. Greer:

Yeah, and with technology, because now once people are dragged through the mud like this, those profiles, those videos, all of those smears are findable. They don’t actually vanish. But I think one thing I’ll just say is part of the impetus for this case, which is Khalil versus Columbia University Board of Trustees, and there are multiple plaintiffs and multiple defendants, but one of the impetuses for this case was that the House Committee on Education and Workforce was demanding that Columbia turn over records of their students to the federal government for exercising their protected political speech. And now also in the July 2025 agreement between Columbia and the federal government, there are multiple paragraphs that specify that Columbia will have to hand over more student data to the federal government, particularly when students are exercising their protected political speech. And so these coercive mechanisms are something that is just really important to emphasize here.

It is about funding, and of course, that aids the institution in protecting its business interests over its faculty and students. But the other component of this is that they become a provider. They’re an identifier, a targeter, a gatherer of information that then gets turned over to the federal government. And that should give us all pause about what that means for our interactions with institutions, whether we are students or workers, because, in my opinion, these tactics will be and have been extended to unions. They’ve been expanded to professional associations like the American Psychological Association, and they will expand. As long as these organizations are content to capitulate, or are not content but feel forced or coerced into doing so, their members are the ones who will have this travesty visited on them, on their information, private speech, private associations, and rights.

And what happened to Columbia students is once they learned that material was being turned over, it had an incredible chilling effect on their speech. They were worried about walking together with friends on campus because their friend happened to have a student visa and they didn’t want their advocacy to be imputed to their friend who was on a student visa, where they would face removal from this country, potentially violently or to a third country. Who knows, really? And students were afraid to speak up in class because they were being videoed. And somehow, the government got hold of those private videos. And so all of these layers of sort of Foucault’s panopticon, of people just being watched and having all their stuff, their private lives, turned over to the federal government, was the basis of this case. And just as we see this litigation as a model of how to stop this, this is also a model the government hopes to repeat, I think.

And so that is the reason we are doing what we are doing here. And Zal may have more to add to that, but I think that it’s really critical to understand why we brought this civil suit and why Mahmoud was brave enough to bring it from detention. In the months leading up to his abduction and detention, we had already been planning to bring it out in public. But why he said he wanted to go forward from detention is because, like Zal said so beautifully at the beginning, he saw the writing on the wall and he saw how many people were going to be impacted by this becoming some kind of precedential behavior. And also, if the courts don’t weigh in here, how this could really start to harm many communities and not just him individually.

Zal K. Shroff:

Absolutely. And I think to your point about the fact that the public doesn’t realize how many students are impacted here, I think one of the roles of this case is to uplift the experience of as many students as possible.

And Amy and her colleagues have spoken to dozens of students who have gone through this experience, who have reported receiving horrendous treatment from the university, being unable to apply to graduate school, missing precious employment opportunities, all because of these sham investigations that claim these students are engaging in discrimination when they are doing political advocacy. And again, that’s all at the behest of the Trump administration and Columbia going along with it. And I’d say for every student we know about, there are many that we actually don’t know about because of their fear about coming forward to talk about this experience. And so we will learn through discovery about the harm that Columbia has caused and the number of students it has put through the wringer by doing this.

And I think part of our job will be to share that with the public, with the press, to get the word out about what exactly has gone on here. And as you’ll see, even in the filings for the case so far, we’ve actually posted the exact things that they’ve been accused of, targeted for, and then punished for to the public docket with students’ consent. And you’ll see how remarkably innocuous some of those things are: passing around a flyer that says that Columbia’s definition of antisemitism is actually antisemitic in itself. Jewish students being targeted for that, students being targeted for going to a silent protest. These are the things that students are having to deal with and that the general public is unaware of. And so I think part of the truth telling of the case will be bringing that to light and having the court uplift that, so that it is included in the public record.

Amy E. Greer:

And the one thing, I just thought of this, and I just want to emphasize this. I have heard from people in regular conversation, and seen back when I was still on social media, people kind of talking about these college and university students who are speaking out for Palestinian rights and trying to end genocide, that it’s like privileged white kids who just let the consequences come because whatever. I’m sure many people have heard those kinds of things. Just to be absolutely clear about who has been intentionally the victim of these policies: they’re students of color, particularly Palestinian, Arab and Muslim students, South Asian students, black students, first generation students who see themselves in the Palestinian struggle for liberation and who are putting their whole lives on the line. They no longer have housing. They have lost their student visas, they have lost in some cases job prospects that Zal already outlined.

They have lost access to medical care, they have lost access to food. Some of these children are couch surfing because they permanently lost access to residential housing. For saying that genocide is wrong and that they don’t want to be part of an institution that contributes to the genocide of a people, many of whose skin colors or belief systems or families are resonant with their own and their own histories and struggles for liberation and for freedom and for life, to live and thrive as they are in this world with the bodies that they’re in, with the identities they hold. And so I believe there is a really important point to make in that I am a messenger for this, and I know I look the way I do, but I do not resemble the many students who have repeatedly put their forebearers on the line to defend our rights and promote a society where everyone thrives.

Marc Steiner:

I’m glad you closed it that way. And I think it’s important for everybody to hear these things and understand what’s going on. I’ll just say a word to both of you: thank you both for joining us today, and also, Mahmoud or anyone else you need to bring on is welcome to join us so we can keep airing this out front, because it’s a fight for their freedom. It’s a battle for academic freedom, it’s a battle for the future of our democracy, and you all are in the middle of it. So I want to thank you both for your efforts and for your time today.

Amy E. Greer:

Marc, thank you so

Zal K. Shroff:

Much.

Amy E. Greer:

Thank you so much.

Marc Steiner:

Thanks to Kayla Rivara, David Hebden, and Stephen Frank, the program’s audio editor, for making it all work in the background. And everyone here at The Real News for making this show possible. Please let me know what you thought of what you heard today, and what you would like us to cover. Just write to me at mss@therealnews.com and I’ll get right back to you. Once again, thank you to Amy Greer and Zal Shroff for the work they do and for joining us today. I’m Marc Steiner for the team at The Real News. Stay involved. Keep your ears open and take care.